AI must be elevated from scattered experiments to robust production solutions on a large scale. A crucial element in this transition is increased maturity in developing and operating AI models using MLOps, the AI version of DevOps. This provides the company with a documented, optimised, and secure process that delivers significant business benefits.

2023 was the year when interest in AI exploded, following the launch of OpenAI’s ChatGPT in November 2022. However, it is only now that companies are beginning to work structurally and purposefully with AI to achieve sustainable benefits. A McKinsey report from May 30, 2024, indicates that 72% of surveyed organisations worldwide now use AI, compared to around 50% in the previous five years. At the same time, over 50% of the surveyed companies use AI in more than one business function, compared to under 30% in 2023.

From Manual to MLOps

More importantly, the approach to AI has also changed. Initially, it involved limited and scattered experiments to gather experience. The next level is implementing AI on a larger scale to fully leverage technological opportunities. In other words, AI must be scaled up to full production solutions.

This means that the development of AI solutions should follow the same systematic approach used for years in software development. Automated build, test, and monitoring processes should also apply to AI solutions.

The method is called MLOps (Machine Learning Operations), the AI version of DevOps (Development and Operations). MLOps focuses on streamlining processes, moving from manual AI experiments to solutions that are deployed, maintained, and monitored in production.

Currently, many organisations find that a data scientist's output is put into production manually. This easily leads to errors, and the resulting solutions are monitored only to a limited extent. A streamlined process is needed to deliver an effective and robust product: one where the models can be relied on to perform as expected and to meet the required response times and uptime, for example when serving customers.

Microsoft Recommends 7 Key Principles for MLOps:

- Use version control for all code and data for full traceability.

- Use multiple environments (development, testing, and production) to catch errors before they cause problems.

- Maintain infrastructure as code (IaC) and under version control.

- Ensure full traceability of experiments, answering which data, which versions, and what output.

- Automatically test code, data integrity, and model integrity. Errors are difficult to spot manually.

- Use Continuous Integration (CI) and Continuous Delivery (CD) from classic DevOps to automatically build and test code and models.

- Monitor services, models, and data. Data and models can become unreliable over time, making monitoring critical.
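The automated-testing principle in the list above can be illustrated with a minimal sketch. It assumes a hypothetical tabular dataset with `age` and `basket_value` columns and a model exposed as a plain `predict` function; the schema, the function names, and the accuracy threshold are illustrative assumptions, not part of Microsoft's guidance.

```python
from typing import Callable, Sequence

# Hypothetical schema for illustration: column -> (expected type, minimum, maximum).
SCHEMA = {"age": (int, 0, 120), "basket_value": (float, 0.0, 10_000.0)}


def check_data_integrity(rows: Sequence[dict]) -> list[str]:
    """Return a list of readable problems; an empty list means the data passed."""
    problems = []
    for i, row in enumerate(rows):
        for column, (expected_type, low, high) in SCHEMA.items():
            value = row.get(column)
            if not isinstance(value, expected_type):
                problems.append(
                    f"row {i}: {column} is missing or not {expected_type.__name__}"
                )
            elif not low <= value <= high:
                problems.append(f"row {i}: {column}={value} outside [{low}, {high}]")
    return problems


def check_model_integrity(
    predict: Callable[[dict], bool],
    examples: Sequence[tuple[dict, bool]],
    min_accuracy: float,
) -> bool:
    """Return True if the model reaches min_accuracy on labelled hold-out examples."""
    if not examples:
        return False  # nothing to validate against, so do not let the model pass
    correct = sum(1 for features, label in examples if predict(features) == label)
    return correct / len(examples) >= min_accuracy
```

In a CI pipeline, checks like these would typically run on every change, and a non-empty problem list or a failed accuracy gate would stop the model from being promoted to the next environment.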

Traceability is a crucial factor for transparency and compliance in MLOps. It means being able to explain at any time why a given model behaves as it does and which code and data were used to train it. In addition, test reports document the validation that was carried out to confirm that the solution works as intended. Traceability is also among the requirements of the EU’s AI Act.
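As a rough sketch of what such traceability could look like in code, the example below builds one record per training run that ties together a fingerprint of the training data, the code version, the parameters, and the resulting metrics. The field names and the `build_run_record` helper are hypothetical; in practice an experiment-tracking service would normally store this information.

```python
import hashlib
import json
from datetime import datetime, timezone


def fingerprint(data: bytes) -> str:
    """SHA-256 digest that identifies the exact bytes used for training."""
    return hashlib.sha256(data).hexdigest()


def build_run_record(
    training_data: bytes, code_version: str, params: dict, metrics: dict
) -> dict:
    """Collect which data, which versions, and what output belong to one run."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "data_sha256": fingerprint(training_data),
        "code_version": code_version,  # e.g. a commit hash (assumed convention)
        "params": params,
        "metrics": metrics,
    }


if __name__ == "__main__":
    record = build_run_record(
        training_data=b"age,basket_value\n30,12.5\n",
        code_version="abc123",
        params={"learning_rate": 0.1},
        metrics={"accuracy": 0.9},
    )
    # Serialising to JSON makes the record easy to store alongside the model.
    print(json.dumps(record, indent=2))
```

Because the data fingerprint changes whenever a single byte of the training data changes, two runs with identical records (apart from the timestamp) can be assumed to have used the same inputs.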

Combining Off-the-Shelf and Custom Development

Today, an AI solution is often a mix of traditional software code, APIs, self-built or fine-tuned components, and models from cloud providers or open source communities. Microsoft, with its Copilots and various Azure services, offers both ready-made apps and components that function as building blocks for a complete solution.

This approach means that many versions of many components need to fit together. Orchestrating versions and dependencies, and automatically testing the overall solution, therefore becomes critical for stability in practice.
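One simple way to picture this is a check that compares the component versions a release was tested with against what is actually deployed. The sketch below assumes a hypothetical manifest format (component name mapped to a version string) and made-up component names; it is an illustration of the idea, not a description of any specific Azure feature.

```python
def find_mismatches(pinned: dict[str, str], deployed: dict[str, str]) -> list[str]:
    """Compare tested (pinned) versions with deployed ones and list differences."""
    mismatches = []
    for name, wanted in sorted(pinned.items()):
        actual = deployed.get(name)
        if actual is None:
            mismatches.append(f"{name}: pinned {wanted}, not deployed")
        elif actual != wanted:
            mismatches.append(f"{name}: pinned {wanted}, deployed {actual}")
    return mismatches


if __name__ == "__main__":
    # Hypothetical components of one solution: an API, a model, and a shared library.
    pinned = {"scoring-api": "2.1.0", "churn-model": "1.4.2", "feature-lib": "0.9.0"}
    deployed = {"scoring-api": "2.1.0", "churn-model": "1.5.0"}
    for line in find_mismatches(pinned, deployed):
        print(line)
```

Run as a pipeline step, a non-empty result would signal that the deployed combination is not the one that was tested together.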

MLOps is a Journey

MLOps, like DevOps, can be complicated to get started with. Besides the technical skills and processes needed to create a robust product, MLOps must also handle the more uncertain side of AI. There must be time and resources to experiment with data and models at the outset, until a functioning and secure product is achieved.

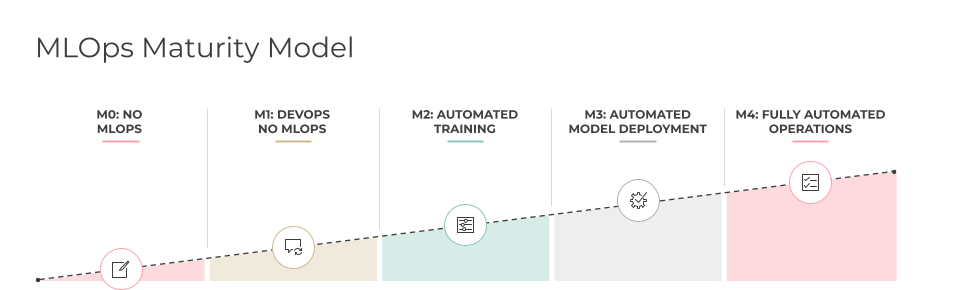

It is, therefore, a good idea to introduce MLOps as a series of improvements based on the maturity level one starts at. The following figure shows five maturity levels based on data from Microsoft.

Examples of MLOps in Practice

How can MLOps be beneficial in practice? Here are some examples of companies using MLOps to optimise their models:

- A retail company analyses the optimal location for stores.

- An online company uses models to understand user behaviour and offer the right products.

- A streaming service processes user data to optimise content for the individual user.

- A medical research unit aims to automate the analysis of enormous amounts of data.

What these solutions have in common is that MLOps ensures not only that an AI model exists to solve the problem, but also that the model runs robustly in production and is continuously monitored to confirm that it still solves the defined task.
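Continuous monitoring can take many forms. As a minimal sketch, the example below flags data drift when the mean of a live feature moves too far from the mean seen at training time, measured in training-time standard deviations. The function names and the threshold of 3.0 are assumptions chosen for illustration; production setups usually rely on richer statistical tests.

```python
from statistics import mean, stdev
from typing import Sequence


def mean_shift(reference: Sequence[float], live: Sequence[float]) -> float:
    """Distance between live and reference means, in reference standard deviations."""
    spread = stdev(reference)  # needs at least two reference values
    difference = abs(mean(live) - mean(reference))
    if spread == 0:
        # A constant reference feature: any change at all counts as a large shift.
        return float("inf") if difference > 0 else 0.0
    return difference / spread


def has_drifted(
    reference: Sequence[float], live: Sequence[float], threshold: float = 3.0
) -> bool:
    """Return True when the live data has moved beyond the allowed threshold."""
    return mean_shift(reference, live) > threshold


if __name__ == "__main__":
    training_values = [10, 12, 11, 9, 10, 11, 12, 9]
    print(has_drifted(training_values, [10, 11, 10, 12]))  # False: close to training data
    print(has_drifted(training_values, [20, 21, 19, 22]))  # True: clearly shifted
```

A check like this could run on a schedule against recent production inputs and raise an alert, or trigger retraining, when drift is detected.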

Devoteam Cloud Enabler for MLOps

Devoteam offers a method and an accelerator to implement MLOps in Azure, focusing on time-to-market and security in solutions. Our accelerator is based on the latest Azure components, including Azure Machine Learning, Azure OpenAI, and Azure Kubernetes Service. We call our method Cloud Enabler for MLOps.

It includes a method, documentation, infrastructure, MLOps automation, monitoring, and a set of example jobs that show how to build pipelines end-to-end. Cloud Enabler for MLOps supports both simple machine learning models and more advanced scenarios such as fine-tuning a large language model (LLM). For most models, the resulting product is a container with an API that can be hosted in the Azure cloud or on-premises if preferred.

A fundamental principle in our approach is to help the customer quickly establish a platform for MLOps. At the same time, we ensure that the customer becomes self-sufficient with the right knowledge and method to work independently.

Are you planning to elevate AI to the next level in your organisation and create robust AI solutions using MLOps? Whether you need to discuss specific needs and challenges in your business or just have questions about the article, feel free to contact us, and we will get back to you as soon as possible.